AppFabric Caching (aka Velocity Caching) - Microsoft .NET

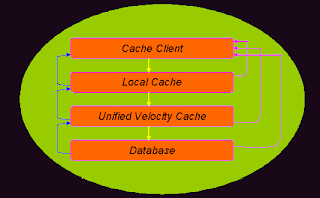

Caching is essential for speeding up a web application because it reduces the number of trips to the actual database. AppFabric Caching, also known as Velocity caching, is a distributed caching system that pools the caching infrastructure available across a cluster of cache servers to improve both application performance and scalability. Much of its effectiveness comes from the fact that the cluster presents a single, unified logical cache, so the same data does not have to be cached more than once. Whenever a client requests data, it is first looked up in the unified logical cache, and if it is available, it can be served from the physical cache memory of whichever server in the cluster holds it.
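The idea of saving database trips can be sketched with a cache-aside lookup. This is only a toy illustration in Python, not AppFabric code: the dictionary `item_cache` stands in for the distributed cache, and `load_from_database` and `get_item` are hypothetical names invented for this example.

```python
# Toy cache-aside lookup. A plain dict stands in for the distributed cache;
# none of these names come from the AppFabric API.
db_trips = 0
item_cache = {}


def load_from_database(key):
    """Hypothetical slow data source; counts how often it is hit."""
    global db_trips
    db_trips += 1
    return f"row-for-{key}"


def get_item(key):
    """Return the cached value, going to the database only on a miss."""
    if key in item_cache:
        return item_cache[key]
    value = load_from_database(key)
    item_cache[key] = value  # remember it for later requests
    return value


first = get_item("customer:42")   # miss: one database trip
second = get_item("customer:42")  # hit: served from the cache
```

After both calls, `db_trips` is still 1, because the second request never reaches the database.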

Velocity caching is implemented as a synchronized tier, and the service is accessed over the network, with each cache server in the cluster running the cache process (DistributedCache.exe). Although several cache servers contribute to the shared cache memory, data enters and leaves through one common gateway: a centralized, shared cache. In code, the distributed cache is handled with simple methods such as Get, Put and Remove, which retrieve, insert, update or delete data items in the unified logical cache as a whole. The programmer does not need to worry about the internal implementation or about where in the cluster the cached data physically lives.
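To make the "one logical cache, many physical servers" idea concrete, here is a minimal Python sketch. It is a toy model under stated assumptions: `CacheHost` and `LogicalCache` are hypothetical classes, and the simple hash-modulo routing is an assumption chosen for brevity, not a description of how AppFabric actually places data.

```python
import zlib


class CacheHost:
    """One cache server; its dict stands in for that server's RAM."""

    def __init__(self, name):
        self.name = name
        self.store = {}


class LogicalCache:
    """Single entry point that hides which host holds each key."""

    def __init__(self, hosts):
        self.hosts = hosts

    def _host_for(self, key):
        # Stable hash, so every client maps a given key to the same host.
        return self.hosts[zlib.crc32(key.encode("utf-8")) % len(self.hosts)]

    def put(self, key, value):
        self._host_for(key).store[key] = value  # insert or update

    def get(self, key):
        return self._host_for(key).store.get(key)  # None on a miss

    def remove(self, key):
        # True if the key existed and was deleted.
        return self._host_for(key).store.pop(key, None) is not None


hosts = [CacheHost(f"cache{i}") for i in range(3)]
logical_cache = LogicalCache(hosts)
for n in range(10):
    logical_cache.put(f"item:{n}", n * n)

# Count how many hosts physically hold one particular item.
copies = sum(1 for h in hosts if "item:7" in h.store)
```

The caller only ever talks to `logical_cache`; each item ends up on exactly one host, and the caller never needs to know which.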

Notable Aspects:

- Each server in the cluster keeps the session object data in its RAM rather than writing it to disk, since disk access would cancel out the speed advantage of caching

- For session data, the programmer does not have to change or add any code; changing a configuration setting is all that is needed to activate velocity caching

- Because the cache is shared, multiple web servers can each run an instance of the same application, which helps with load balancing

- Both the web server (IIS) and the cache server (AppFabric) can be deployed on a single application server running Windows Server

- Turning on the high-availability feature mitigates the loss of a server in the cluster by synchronously storing a secondary copy of each data item on a different server
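The high-availability point can be illustrated with a small Python sketch. It is a toy model, not AppFabric's implementation: `HACache`, its `fail` method and the "next host in the list" choice of secondary are all assumptions made for this example.

```python
import zlib


class HACache:
    """Toy cluster: each key has a primary host and one secondary host."""

    def __init__(self, host_names):
        if len(host_names) < 2:
            raise ValueError("a secondary copy needs at least two hosts")
        self.names = list(host_names)
        self.stores = {name: {} for name in self.names}
        self.down = set()

    def replicas(self, key):
        # Primary is chosen by a stable hash; secondary is the next host.
        i = zlib.crc32(key.encode("utf-8")) % len(self.names)
        return self.names[i], self.names[(i + 1) % len(self.names)]

    def put(self, key, value):
        # Synchronous: every live replica is written before put() returns.
        for name in self.replicas(key):
            if name not in self.down:
                self.stores[name][key] = value

    def get(self, key):
        # Read from the first replica that is still up.
        for name in self.replicas(key):
            if name not in self.down:
                return self.stores[name].get(key)
        return None

    def fail(self, name):
        # Simulate a crash: the host goes down and its RAM contents are lost.
        self.down.add(name)
        self.stores[name].clear()


cluster = HACache(["cache0", "cache1", "cache2"])
cluster.put("session:abc", {"user": "alice"})
primary, secondary = cluster.replicas("session:abc")
cluster.fail(primary)
survivor = cluster.get("session:abc")  # served from the secondary copy
```

Even though the primary host has lost its memory, the item is still readable because the secondary copy was written on a different host at the time of the `put`.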

One of the key challenges in implementing velocity caching is managing parallel access to data that changes rapidly. Velocity caching offers optimistic and pessimistic concurrency models, so the one that suits the application can be chosen. Controlling access to sensitive data among clients is another challenge, which is addressed by limiting access to such data through encryption or restricted accounts. AppFabric caching attempts to get the most out of the underlying computer infrastructure with a distributed yet shared architecture, providing value to both application developers and end users. Things can only get better from here.